guest@monty.sh:/public/posts$ f="Extolling the virtues of the construction of Forth Variables"; "date -r $f -u +"%Y-%m-%d" && cat $f

2025-01-08For the last week or so, I’ve been working on building my own Forth core for the RV64G/C target. Now, Forth and my strange infatuation with it are both pretty long topics, ones that I’ll refrain from speaking myself hoarse over at this juncture. But recently I was speaking with a colleague of mine about the efficiency of how certain implementations choose to implement the dictionary, and specifically how implementations are encouraged towards extremely efficient optimizations by the nature of the language design itself.

I won’t describe how a Forth environment works, because that’s an entire book unto itself. But for the sake of completeness I will briefly how Forth’s dictionary itself works.

At its core, a Forth dictionary is a singly linked list of software components - they may be functions, constants, variables, or other things entirely. The actual form and layout of these components in memory is implementation dependent, but the general idea is that the header contains a link somewhere to the code to be executed.

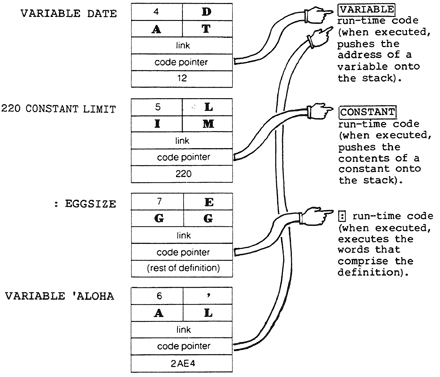

(From Starting Forth, Chapter 9)

(From Starting Forth, Chapter 9)

The very first entry in the above image is the one I want to talk about today, but note that all these definitions exist in the same linked list, and there’s no requirement stating they must have some field to differentiate them… so how do they all do these seemingly different things?

In reality, all these concepts — variables, constants, and executable code — are one and the same. The image already spoiled my point, but to reiterate, a variable is just an executable entry that “returns” the address of a read-write memory location. A constant “returns” the value itself. And code, well. Code just runs.

In a sense, it’s a kind of polymorphism, in the way that the same interface is used for different underlying form, but instead of happening on the level of data specified by the programmer, it happens on the level of the language and environment itself! To put it in more “modern” terms, here’s a little pseudocode snippet that explains the logic (with a caveat I’ll get to later!). It should be familiar to anyone who knows Java and C.

interface ICallable {

void call()

}

List<ICallable> dictionary = new List<ICallable>();

class PrintProcedure implements ICallable {

void call(Stack* s) {

printf("%d", s.pop());

}

}

class MyVariable implements ICallable {

double inner = 0;

void call(Stack* s) {

s.push(&inner)

}

}

(I’ve discarded the linked-list concept for this example because it’s unimportant - the thing to focus on is the interfaces!)

Of course, this type of polymorphism you’re used to comes with a cost — usually in the form of a vtable. For the uninitiated who don’t want to read the wiki page, a vtable is a data structure that helps disambiguate implementations from each other when multiple compatible class forms could be stored in an abstract-type container. It’s an incredibly insufficient oversimplication, but for the purposes of this post it’s safe enough to think of a vtable as the following pseudocode:

ICallable i = get_instance();

switch(classof i) {

case(PrintProcedure):

run_print_procedure_variant();

case(Variable):

run_variable_variant();

// ... rest of cases for each concrete implementation of the interface

}

(Yes, I know it’s not one big single switch case in reality. This is an oversimplification to drive my point - if you want a deep explanation, go check the wikipedia article.)

In essence, the vtable allows the software you write to call the correct instance method when multiple type-compatible classes are possible. A lot of work has been done all the way from the compilers to the processor architecture itself to mitigate this cost and make virtual method dispatch as speedy as any other call, but the fact remains that it’s still an extra step the computer has to take, and thus more work for it to do.

Anyways, the most interesting part is, in most Forths a vtable simply isn’t needed. Because the Forth programming model specifies invoking a variable pushes its address to the stack for further manipulation, there’s no semantic difference and no branching required in its implementation — in this way, the Forth “engine” simply needs to know how to search through the list for whatever word it’s looking for, and once it finds it, the engine simply unconditionally begins executing the code pointed to by the entry - in the above example, that would be a dumb, no-context-required invocation of call() on the matching entry. Plus, at the end of the day, even if a vtable is needed, it’s easy to transparently implement one thanks to Forth’s self-building, self-modifying nature.

It is also possible to argue that the dictionary itself performs the work a vtable would, but even that is an efficiency gain in itself: the dictionary must be present in a Forth, or else it wouldn’t be a Forth, so why not implement it optimally?

The design decision becomes even more interesting with a real-world implementation. Here’s what my own RISC-V assembly implementation of a variable structure:

// Header snipped for brevity.

.option norvc

auipc t0, 0

addi t0, t0, 24

sd t0, -8(s0)

addi s0, s0, -8

ret

.dword 0

As well as jonesforth’s implementation ported to 64 bit RISC-V assembly:

// Header snipped for brevity.

.option norvc

la t0, var_name

sd t0, -8(s0)

addi s0, s0, -8

ret

var_name: .dword 0

Jonesforth’s implementation makes use of a label to get the address of the variable cell (likely because that reduces the implementation to a single x86 assembly instruction - push $var_name). This relies on the assembler to resolve the label, which is absolutely efficient from the author’s perspective. My snippet is similar, but adds a single instruction to the mix to enable position-independent allocation at runtime. It uses auipc t0, 0 to store its own address into the register t0, and then it adds 24 to that address. Since the instruction compression extension is disabled by .option norvc, each instruction is guaranteed to be 4 bytes long - that means [auipc t0, 0] + 24 points exactly to the starting address of that 64-bit memory reservation!

Ultimately, both achieve the same thing, although my implementation sacrifices a tiny bit of performance for portability. Of course, the exact implementation isn’t so important as much as the concept - the routine could just as easily return an offset into some allocation table, or any number of other methods of retrieving a memory region!

This, I feel, is where the concept really shines. Both snippets are extremely simple, and jumping directly into them works just like any other executable dictionary entry would! The efficiency of this design cannot be overstated; Forth effectively sidesteps the overhead of dynamic type resolution altogether. Instead, the dictionary’s structure itself enforces consistency, guiding the runtime to behave correctly without requiring additional context. This is the essence of Forth’s brilliance: the simplicity of the implementation is a direct consequence of the simplicity of the model.

This isn’t just a theoretical gain, either. The absence of a vtable or equivalent mechanism translates directly to reduced code size and faster execution. For embedded systems, where Forth has historically found much of its niche, these savings can be critical. Every byte and cycle matters when you’re working within the constraints of a microcontroller, custom hardware, or unknown memory layout.

The method can be a little strange to wrap one’s head around, especially for programmers used to the rigid world of higher-level languages where instructions and data are kept in their own corrals, never to touch forever and ever, but I felt it’s a superbly elegant little optimization that improves not only runtime performance (by removing additional checks the engine might need to do) but lowers implementation effort (by reducing the amount of mental load needed to sort variable definitions from executable definitions).

Of course, it’s not without cost: everything in Forth remains as simple as numeric cells in memory (which is always the case, but even since the days of yore language designers try very hard to make it seem otherwise). Some say this is less than an issue, or even a feature, others say it’s woefully incomplete without OOP or some sort of type system, and other still fall in many places between the two extremes. Regardless of one’s stance, the price paid is the same: additional complexity is foisted on the programmer as it becomes their responsibility to avoid type-related bugs, be it through hacking OOP functionality into their environment or simply working around the limitation with their own creative faculties.

Because this is my personal blog, I won’t hold back from expressing my opinion on the matter (not that I’ve bothered to hold back thus far). Personally, I fall quite close to the “less than an issue” side of the spectrum. Given how incredibly extensible forth is by its very nature, if I need to create data structures and operate on them extensively I can simply write some new words to create and manipulate them — no need to waste time and effort writing boilerplate accessors I’ll never use. And besides, I’m not married to Forth. If I’m using it, I likely need its flexibility and power. If I need deep, complex type-based operations I’d just use a different language.

And that brings me back to the central brilliance of Forth variable’s construction: it is not a “one-size-fits-all” solution, and it doesn’t pretend to be. Indeed, Forth operates like a scalpel in a world of Swiss Army knives, prioritizing simplicity and adaptability over an abundance of built-in features.

This flexibility is emblematic of the language as a whole, not just in in the design of its dictionary. By leaning into its minimalism and composability, it forces programmers to confront the fundamentals of your problem. You end up writing not just software but, effectively, a language tailored to your application. For systems programmers, especially ones like me who enjoy working close to the metal or on constrained devices, this paradigm is less a limitation and more an invitation to craft elegant solutions from the ground up without the baggage of an overly opinionated runtime dictating how things should be done.